NLP (Variational Inference)

#1. Embedded Topic Modeling (ETM)

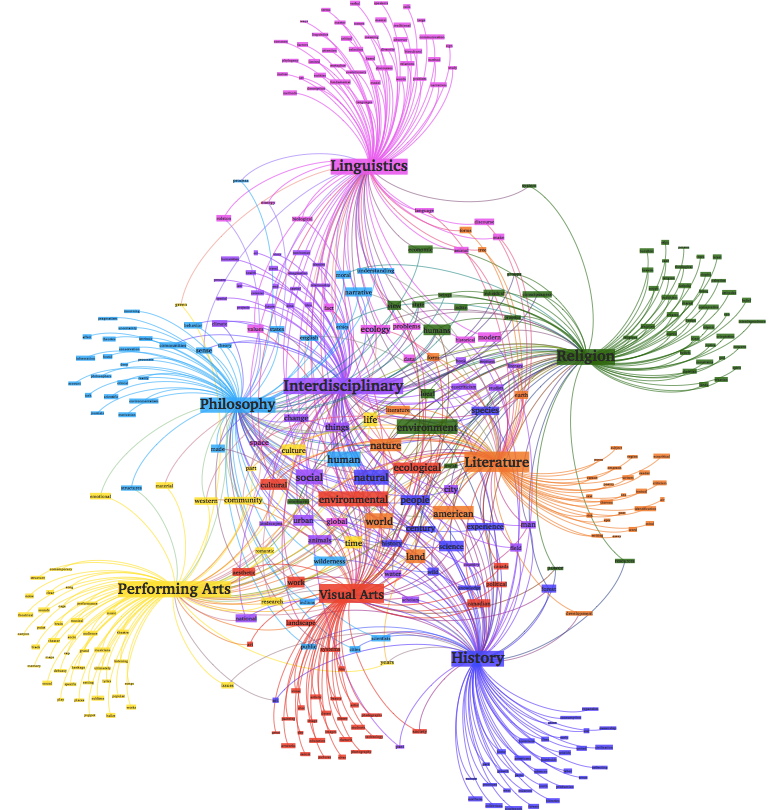

This project explores topic modeling in an embedding space. I represented words with word embeddings and assigned each topic its own embedding in the same space, so that a topic's distribution over the vocabulary follows from how closely its embedding aligns with each word embedding. This led to a more robust representation of the topic distributions.
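As a rough illustration of the idea (not the project's actual code), the sketch below computes a topic-word distribution as a softmax over inner products between topic embeddings and word embeddings, which is how ETM is usually formulated. The names `rho`, `alpha`, and `topic_word_distribution`, as well as the toy dimensions, are assumptions made for this example.

```python
import numpy as np

def topic_word_distribution(rho, alpha):
    """Return a (K, V) matrix whose rows are per-topic word distributions.

    rho:   (V, L) word embeddings   (hypothetical name)
    alpha: (K, L) topic embeddings  (hypothetical name)
    """
    logits = alpha @ rho.T                        # (K, V) embedding inner products
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)   # softmax over the vocabulary

# Toy example: 6 words, 2 topics, 4-dimensional embeddings (random placeholders).
rng = np.random.default_rng(0)
rho = rng.normal(size=(6, 4))
alpha = rng.normal(size=(2, 4))
beta = topic_word_distribution(rho, alpha)
```

Because every topic is tied to the same word embeddings, words that are close in the embedding space tend to receive similar probability under a given topic.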

To improve model fit, I used a Variational Autoencoder (VAE) to approximate the posterior distribution over each document's topic proportions. A key part of the project was an evaluation comparing the ETM against an LDA baseline on two metrics: perplexity, which measures how well a model predicts held-out text (lower is better), and topic diversity, which measures how little the topics' top words overlap (higher is better). Together, these metrics made it possible to identify which of the two models extracted topics more precisely. The project reflects my interest in applying current methods to topic modeling and extracting useful insights from text data.
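The two metrics can be sketched as follows. This is a minimal illustration under stated assumptions, not the evaluation code used in the project: topic diversity is taken to be the fraction of unique words among the top-k words of all topics, and perplexity is computed directly from given document-topic proportions rather than through a document-completion protocol. The function names and the inputs `bow`, `theta`, and `beta` are hypothetical.

```python
import numpy as np

def topic_diversity(beta, top_k=25):
    """Fraction of unique words among the top_k words of every topic.

    beta: (K, V) topic-word distributions. 1.0 means no overlap between topics.
    """
    top_words = np.argsort(-beta, axis=1)[:, :top_k]  # (K, top_k) word indices
    return len(np.unique(top_words)) / top_words.size

def perplexity(bow, theta, beta):
    """Per-word perplexity of bag-of-words counts under a topic model.

    bow:   (D, V) word counts per document
    theta: (D, K) document-topic proportions
    beta:  (K, V) topic-word distributions
    """
    word_probs = theta @ beta                          # (D, V) predicted word probabilities
    log_lik = np.sum(bow * np.log(word_probs + 1e-12)) # small constant avoids log(0)
    return float(np.exp(-log_lik / bow.sum()))

# Toy check: two topics with disjoint top words are fully diverse.
beta_distinct = np.array([[0.5, 0.3, 0.1, 0.1],
                          [0.1, 0.1, 0.3, 0.5]])
diversity = topic_diversity(beta_distinct, top_k=2)

# Toy check: a model that predicts uniformly over 4 words has perplexity of about 4.
beta_uniform = np.full((2, 4), 0.25)
theta = np.array([[0.5, 0.5]])
bow = np.array([[1, 2, 0, 3]])
ppl = perplexity(bow, theta, beta_uniform)
```

The same two functions can be applied to the topic-word matrices of both LDA and ETM, which keeps the comparison on equal footing.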